It's crucial to understand that a testing pyramid must be tailored to each project's unique requirements. The team is responsible for fine-tuning the testing architecture that best fits their project.

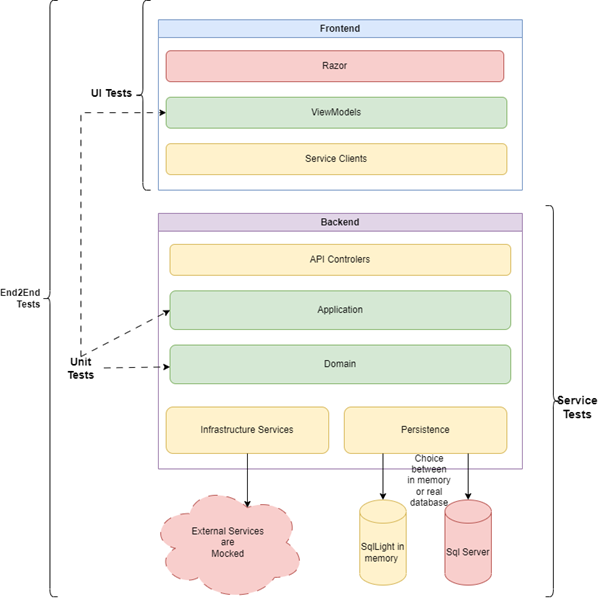

I'm offering an example of a basic test pyramid suited to the Onion architecture I commonly employ in my projects. My intent is to showcase what a testing pyramid might look like for DDD (Domain-Driven Design) projects. However, always consider that it might need further adjustments to fit specific project circumstances.

At the core of my testing strategy lie unit tests. These focus on individual components or functions and are quick to write and maintain. They ensure the fundamental components of our application remain robust.

It's worth noting that a pyramid doesn't stand on its base alone. While unit tests are foundational, they represent just one facet. Beyond unit tests, I write Service Tests to validate the integration of the entire back end. These play a pivotal role in ensuring proper interaction with databases and external services. They're generally slower and more intricate than unit tests, which is why I keep them in separate projects.

The term "Service Test" comes from the fact that these tests exercise the application starting from its service layer.

Separating Service Tests from unit tests and placing them in distinct projects keeps my testing approach manageable and scalable. Unit tests validate individual components, while Service Tests, which serve as my integration tests, verify the interactions with databases and services.

Unit Tests

At the pyramid's base, you find the unit test. This position implies that there are more unit tests than tests of any other kind. A unit test is an automated test that targets a small, isolated piece of code, typically an individual method or class. Its objective is to confirm that this specific piece of code behaves correctly.
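
To make this concrete, here is a minimal sketch of unit tests for a piece of Domain-layer logic. The author's stack is .NET, so this Python version (using the standard library's `unittest`) only illustrates the shape of such tests. The `Order` entity and its methods are hypothetical. Each test checks one behavior of one class, including an edge case, without touching a database or any other dependency.

```python
import unittest
from dataclasses import dataclass, field


@dataclass
class Order:
    """Hypothetical Domain-layer entity: pure logic, no I/O or framework code."""
    lines: list = field(default_factory=list)  # (unit_price_cents, quantity) pairs

    def add_line(self, unit_price_cents: int, quantity: int) -> None:
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        self.lines.append((unit_price_cents, quantity))

    def total_cents(self) -> int:
        return sum(price * qty for price, qty in self.lines)


class OrderTests(unittest.TestCase):
    """Each test targets one behavior of one class, with no dependencies."""

    def test_total_sums_all_lines(self) -> None:
        order = Order()
        order.add_line(1000, 2)
        order.add_line(500, 1)
        self.assertEqual(order.total_cents(), 2500)

    def test_empty_order_totals_zero(self) -> None:
        self.assertEqual(Order().total_cents(), 0)

    def test_rejects_non_positive_quantity(self) -> None:
        # Edge case: invalid input is rejected instead of silently stored.
        with self.assertRaises(ValueError):
            Order().add_line(1000, 0)
```

Run it with `python -m unittest`. Because the entity has no dependencies, these tests execute in milliseconds.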

The included diagram shows the typical software layers of an Onion/Clean architecture, color-coded to indicate how well suited each layer is to unit testing.

- Green: Layers such as ViewModels, Application, and Domain are the primary candidates for unit testing. Testing them in isolation offers high value because these tests run quickly and are easy to maintain.

- Yellow: These layers gain less from being tested standalone. Their unit tests often add limited value because the layers usually rely heavily on the layer below, so integration tests are generally used to verify that these components function and interact correctly.

- Red: Unit testing the red layers usually results in fragile or slow tests that demand considerable maintenance. These layers typically offer a lower testing ROI (return on investment), which makes integration tests the more pragmatic choice.

My unit tests share the following attributes:

- Testing Single Units: A unit test evaluates one class or method at a time. This limited scope makes it simpler to identify issues, aiding codebase maintenance and refactoring.

- Testing Pure Logic: The primary purpose of a unit test is to confirm the logic of a class or method. This includes checking that the method returns the expected results for given inputs and handles edge cases correctly.

- Mocking Dependencies: To ensure code isolation, unit tests shouldn't depend on external systems. For instance, a class interacting with a database should be tested with a mock or stub, not a real database connection.

- Fast Execution: Because unit tests form the base of the pyramid and are meant to run frequently, ideally after every code change, they must execute quickly.
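
The Mocking Dependencies attribute can be sketched as follows, again in Python rather than the author's C#. `CreditCheckService` and its repository are hypothetical names. The service stands for an Application-layer class, and `unittest.mock.Mock` replaces the repository so the test never opens a database connection.

```python
import unittest
from typing import Protocol
from unittest.mock import Mock


class CustomerRepository(Protocol):
    """Hypothetical port defined in the Application layer; the real
    implementation would live in Infrastructure and query a database."""
    def get_credit_limit(self, customer_id: str) -> int: ...
    def get_open_balance(self, customer_id: str) -> int: ...


class CreditCheckService:
    """Hypothetical Application-layer service under test."""

    def __init__(self, repository: CustomerRepository) -> None:
        self._repository = repository

    def can_place_order(self, customer_id: str, amount: int) -> bool:
        limit = self._repository.get_credit_limit(customer_id)
        balance = self._repository.get_open_balance(customer_id)
        return balance + amount <= limit


class CreditCheckServiceTests(unittest.TestCase):
    def setUp(self) -> None:
        # The mock stands in for the repository: no database is involved.
        self.repository = Mock(spec=["get_credit_limit", "get_open_balance"])
        self.repository.get_credit_limit.return_value = 1000
        self.repository.get_open_balance.return_value = 800
        self.service = CreditCheckService(self.repository)

    def test_allows_order_within_limit(self) -> None:
        self.assertTrue(self.service.can_place_order("c-1", 200))

    def test_rejects_order_over_limit(self) -> None:
        self.assertFalse(self.service.can_place_order("c-1", 201))

    def test_queries_repository_for_given_customer(self) -> None:
        self.service.can_place_order("c-1", 50)
        self.repository.get_credit_limit.assert_called_once_with("c-1")
```

The `spec` list restricts the mock to the repository's method names, so a typo in the service would fail the test instead of silently returning another mock.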

UI Tests

UI Tests validate user interactions and the application's interface. They replicate real user scenarios involving UI elements to ensure the application behaves as expected. My UI Tests run in isolation from the Service Tier and exclusively test the application's presentation layer. Because they run in isolation, you can view them as a specialized subset of unit tests. Applying the MVVM pattern within my Blazor components lets me abstract the UI logic into ViewModels, which frees the tests from depending on UI-specific details that would make them brittle. To test the logic that remains in the Razor views, I typically use bUnit, but I try to avoid such tests as much as possible.

While UI Tests are insightful, they can be fragile and require regular updates, especially when UI elements change. They also might not detect every UI issue, which is why a comprehensive testing strategy includes end-to-end tests to verify application quality thoroughly.
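
The MVVM approach described above can be illustrated with a minimal, hypothetical ViewModel. In the author's Blazor projects this would be a C# class bound to a Razor component. Here it is a plain Python class, so the sketch shows only the idea: the state and UI logic live in the ViewModel and can be tested with a mocked service, without rendering anything. `OrderListViewModel`, `list_orders`, and the error text are assumptions.

```python
import unittest
from typing import Optional
from unittest.mock import Mock


class OrderListViewModel:
    """Hypothetical ViewModel: holds the state and UI logic a view binds to,
    so the view itself stays a thin rendering layer."""

    def __init__(self, order_service) -> None:
        self._order_service = order_service  # application-layer dependency
        self.orders: list = []
        self.is_loading = False
        self.error_message: Optional[str] = None

    def load(self) -> None:
        self.is_loading = True
        self.error_message = None
        try:
            self.orders = self._order_service.list_orders()
        except ConnectionError:
            self.orders = []
            self.error_message = "Orders could not be loaded."
        finally:
            self.is_loading = False

    @property
    def show_empty_state(self) -> bool:
        # UI logic that would otherwise sit in the markup as a conditional.
        return not self.is_loading and self.error_message is None and not self.orders


class OrderListViewModelTests(unittest.TestCase):
    def test_load_populates_orders(self) -> None:
        service = Mock()
        service.list_orders.return_value = ["#1001", "#1002"]
        vm = OrderListViewModel(service)
        vm.load()
        self.assertEqual(vm.orders, ["#1001", "#1002"])
        self.assertFalse(vm.is_loading)
        self.assertFalse(vm.show_empty_state)

    def test_load_failure_sets_error_message(self) -> None:
        service = Mock()
        service.list_orders.side_effect = ConnectionError()
        vm = OrderListViewModel(service)
        vm.load()
        self.assertEqual(vm.error_message, "Orders could not be loaded.")
        self.assertFalse(vm.show_empty_state)

    def test_empty_result_shows_empty_state(self) -> None:
        service = Mock()
        service.list_orders.return_value = []
        vm = OrderListViewModel(service)
        vm.load()
        self.assertTrue(vm.show_empty_state)
```

None of these tests depend on markup, CSS classes, or element IDs, which is what keeps them stable when the view's layout changes.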

Service Tests

Occupying the pyramid's middle layer, Service Tests anchor my integration testing strategy. They focus on the application's back-end components, ensuring that services, APIs, and business logic layers function together correctly. They also serve as a form of acceptance testing for complex logic. Because they can test complete business flows without the fragility of UI tests, Service Tests often yield the highest ROI, which makes them indispensable in my testing strategy.

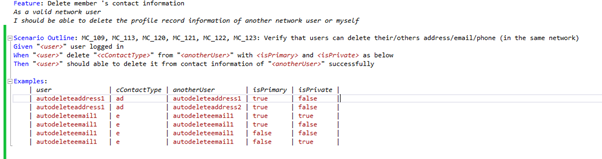

For Service Tests, I use SpecFlow and follow the BDD (Behavior-Driven Development) approach. This method bridges the gap between non-technical and technical team members and fosters a shared understanding of the application's behavior.

Domain-Driven Design's concept of Ubiquitous Language pairs seamlessly with BDD. It denotes a shared language among team members, ensuring mutual understanding of domain concepts and requirements.

SpecFlow, a popular BDD tool for .NET, enables business analysts to describe the expected application behavior as scenarios in Gherkin syntax. Developers then write step definitions: pieces of code that map each scenario step to a test action. By adopting a BDD approach with tools like SpecFlow, I ensure the Ubiquitous Language isn't just documentation but a living specification that is executable as tests. This promotes clear communication and a cohesive application.
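
In SpecFlow, step definitions are C# methods marked with attributes such as `[Given]`, `[When]`, and `[Then]` inside a `[Binding]` class. The sketch below shows the underlying idea of mapping Gherkin steps to code without a .NET toolchain by hand-rolling a tiny step binder in Python. It is not SpecFlow's API, and it ignores features such as tables, scenario outlines, and hooks. The credit-limit scenario and every name in it are hypothetical.

```python
from __future__ import annotations

import re
from typing import Callable

# Hypothetical scenario phrased in the domain's Ubiquitous Language.
SCENARIO = """
Scenario: Order within the credit limit is accepted
  Given a customer with a credit limit of 1000
  And an open balance of 800
  When the customer places an order of 200
  Then the order is accepted
"""

_steps: list[tuple[re.Pattern, Callable]] = []


def step(pattern: str):
    """Register a step definition: a regex bound to a Python function."""
    def register(func: Callable) -> Callable:
        _steps.append((re.compile(pattern), func))
        return func
    return register


@step(r"a customer with a credit limit of (\d+)")
def given_credit_limit(ctx: dict, limit: str) -> None:
    ctx["limit"] = int(limit)


@step(r"an open balance of (\d+)")
def given_open_balance(ctx: dict, balance: str) -> None:
    ctx["balance"] = int(balance)


@step(r"the customer places an order of (\d+)")
def when_order_placed(ctx: dict, amount: str) -> None:
    # A real Service Test would call the application service here instead.
    ctx["accepted"] = ctx["balance"] + int(amount) <= ctx["limit"]


@step(r"the order is accepted")
def then_order_accepted(ctx: dict) -> None:
    assert ctx["accepted"], "expected the order to be accepted"


@step(r"the order is rejected")
def then_order_rejected(ctx: dict) -> None:
    assert not ctx["accepted"], "expected the order to be rejected"


def run_scenario(text: str) -> dict:
    """Match each Given/When/Then/And line to a step definition and run it."""
    ctx: dict = {}
    for raw in text.strip().splitlines()[1:]:  # skip the 'Scenario:' line
        keyword, _, body = raw.strip().partition(" ")
        if keyword not in {"Given", "When", "Then", "And", "But"}:
            raise ValueError(f"unexpected line: {raw!r}")
        for pattern, func in _steps:
            match = pattern.fullmatch(body)
            if match:
                func(ctx, *match.groups())
                break
        else:
            raise LookupError(f"no step definition for: {body!r}")
    return ctx
```

Because the scenario text uses the same terms as the domain model, a business analyst can read and adjust it while the step definitions stay in developer hands.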

Database: In-memory or Disk-based?

Efficiency and speed are paramount for all tests, especially when they run as part of a continuous integration process on a build server. The choice of database significantly influences both.

In my experience, an in-memory database such as SQLite is typically the most efficient choice for build times. However, SQLite can be unsuitable when the application relies on database features such as stored procedures, which SQLite does not support. In such cases, a database that more closely represents the production environment, like SQL Server, is essential. To optimize tests without compromising build times, I suggest making the tests configurable for different databases. For instance, use SQLite on the build server for fast test runs and SQL Server locally.
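
One way to make Service Tests configurable for different databases is to select the connection from an environment variable, as in this Python sketch. `TEST_DB_PROVIDER` and `TEST_DB_CONNECTION` are hypothetical variable names, and the SQL Server branch assumes the third-party `pyodbc` driver. The author's .NET setup would use its own data-access configuration instead.

```python
import os
import sqlite3
from typing import Optional


def open_test_database(provider: Optional[str] = None):
    """Open a database connection for Service Tests.

    The provider comes from the argument or from the hypothetical
    TEST_DB_PROVIDER environment variable:
      "sqlite-memory" (default): fast, throwaway database for the build server
      "sqlserver": production-like database for local runs; needs the
                   third-party pyodbc driver and a connection string in the
                   hypothetical TEST_DB_CONNECTION variable
    """
    provider = provider or os.environ.get("TEST_DB_PROVIDER", "sqlite-memory")
    if provider == "sqlite-memory":
        return sqlite3.connect(":memory:")
    if provider == "sqlserver":
        import pyodbc  # imported lazily so SQLite-only runs don't need it
        return pyodbc.connect(os.environ["TEST_DB_CONNECTION"])
    raise ValueError(f"unknown test database provider: {provider!r}")


def create_schema(conn) -> None:
    # Portable DDL that both SQLite and SQL Server accept.
    conn.execute(
        "CREATE TABLE customers (id VARCHAR(20) PRIMARY KEY, credit_limit INT NOT NULL)"
    )
    conn.commit()
```

On the build server, leaving the variable unset gives the fast in-memory default. Locally, setting it to `sqlserver` runs the same tests against a production-like database. Tests that depend on stored procedures would still need the SQL Server provider.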

It's also crucial that tests mock external services, so that the application's own logic remains the primary focus of testing.
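
Putting these pieces together, here is a minimal sketch of a Service Test. It drives a hypothetical application service through a real SQL repository backed by in-memory SQLite, while a hand-written fake replaces the external payment service. Python is used for illustration only. `PlaceOrderService`, `SqlOrderRepository`, and `FakePaymentGateway` are hypothetical names, not part of the author's codebase.

```python
import sqlite3
import unittest


class SqlOrderRepository:
    """Hypothetical Infrastructure-layer repository backed by a real database."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self._conn = conn
        conn.execute(
            "CREATE TABLE IF NOT EXISTS orders "
            "(id INTEGER PRIMARY KEY, amount INT NOT NULL, status TEXT NOT NULL)"
        )

    def add(self, amount: int, status: str) -> int:
        cur = self._conn.execute(
            "INSERT INTO orders (amount, status) VALUES (?, ?)", (amount, status)
        )
        self._conn.commit()
        return cur.lastrowid

    def status_of(self, order_id: int) -> str:
        row = self._conn.execute(
            "SELECT status FROM orders WHERE id = ?", (order_id,)
        ).fetchone()
        return row[0]


class FakePaymentGateway:
    """Stand-in for a hypothetical external payment API: no network calls."""

    def __init__(self, approve: bool) -> None:
        self.approve = approve
        self.charged: list = []

    def charge(self, amount: int) -> bool:
        self.charged.append(amount)
        return self.approve


class PlaceOrderService:
    """Hypothetical application service: the entry point a Service Test drives."""

    def __init__(self, repository, payments) -> None:
        self._repository = repository
        self._payments = payments

    def place_order(self, amount: int) -> int:
        status = "paid" if self._payments.charge(amount) else "payment_failed"
        return self._repository.add(amount, status)


class PlaceOrderServiceTests(unittest.TestCase):
    def setUp(self) -> None:
        self.conn = sqlite3.connect(":memory:")  # real SQL, but fast and throwaway
        self.repository = SqlOrderRepository(self.conn)

    def tearDown(self) -> None:
        self.conn.close()

    def test_paid_order_is_persisted_as_paid(self) -> None:
        payments = FakePaymentGateway(approve=True)
        order_id = PlaceOrderService(self.repository, payments).place_order(250)
        self.assertEqual(self.repository.status_of(order_id), "paid")
        self.assertEqual(payments.charged, [250])

    def test_declined_payment_is_persisted_as_failed(self) -> None:
        payments = FakePaymentGateway(approve=False)
        order_id = PlaceOrderService(self.repository, payments).place_order(250)
        self.assertEqual(self.repository.status_of(order_id), "payment_failed")
```

Only the external boundary is faked. The service, repository, and SQL all run for real, which is what distinguishes this from the mocked unit test of the Application layer.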

Conclusion

Structuring my test pyramid with unit tests at the base, Service Tests in the middle, and UI tests at the peak results in a comprehensive testing strategy. While UI tests play a role, it's the Service Tests that truly underpin a quality product. Combining these tests ensures both the integrity of individual components and the smooth interaction between the application's various tiers.